Developing LLM Applications with LangChain

Published:

LLM course notes

[TOC]

Chapter 1: Introduction to LangChain & Chatbot Mechanics

The LangChain ecosystem

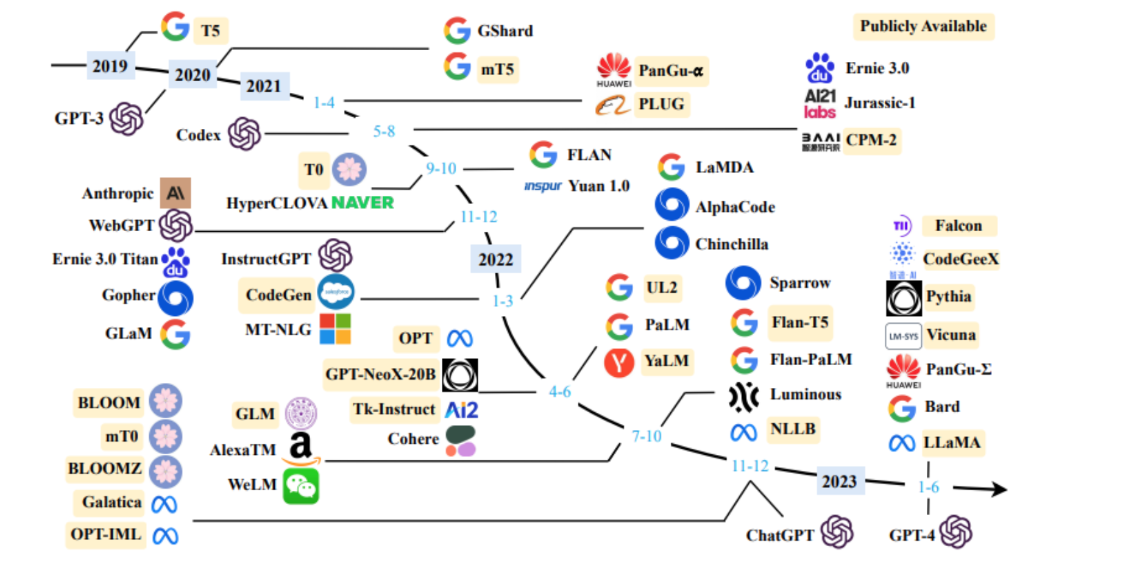

We now live in a world where we’re spoiled for choice when it comes to choosing large language models, or LLMs, for developing AI-powered applications. However, these LLMs differ in their model architecture, training data, intended use cases, and prompting strategies. Additionally, these models often have to interact with other systems to retrieve data or monitor performance, which adds another layer of complexity.

What is LangChain?

Harrison Chase created LangChain in October 2022 to address this complexity. It is an open-source framework for connecting:

- LLMs

- data sources

- other functionality under a single unified syntax.

With LangChain, developers can create scalable, modular LLM applications for almost any use.

Objectives of this course

LangChain encompasses an entire ecosystem of tools, but in this course, we’ll focus on:

- the core functionality of the LangChain library

- chains and tools for optimizing LLM output

- troubleshooting and evaluation techniques

LangChain is available in Python and JavaScript, but this course will only cover the Python version.

Core components of LangChain

- a language model chosen by the developer (open-source or closed-source)

- prompts for turning user inputs into model inputs

- parsers and indexers for organizing data for easy retrieval.

- chains and agents for creating workflows containing different components

Choose models

Hugging Face is a huge repository of open-source datasets, tools, and models.

Using language models hosted on Hugging Face is free, but using them in LangChain requires a Hugging Face API token.

Creating a Hugging Face API token:

- Sign up for a Hugging Face account

- Navigate to https://huggingface.co/settings/tokens

- Select New token and copy the key
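Rather than pasting the token into source code, it can be read from an environment variable. The sketch below assumes the token was exported under the name `HUGGINGFACEHUB_API_TOKEN`; the helper `get_api_token` is a hypothetical name used only for illustration.

```python
import os


def get_api_token(var_name: str = "HUGGINGFACEHUB_API_TOKEN") -> str:
    """Return the API token stored in an environment variable.

    Raises a clear error instead of silently passing an empty token along.
    """
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return token
```

The returned string can then be passed wherever the notes use `huggingfacehub_api_token`, which keeps the secret out of files that might be shared.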

To recap

- LangChain is a fantastic tool for working with natural language.

- In real-world production development, it unlocks intelligent conversations with documents, more capabilities for task automation, and different ways to analyze text data.

- It makes using AI easier and gives us greater control over the entire workflow.

- Advantages of LangChain: it unifies different models from different sources into a consistent, modular workflow.

Code

LangChain has an OpenAI class and a HuggingFaceHub class for interacting with the respective APIs.

- Hugging Face models in LangChain!

from langchain_community.llms import HuggingFaceHub

# Set your Hugging Face API token

huggingfacehub_api_token = ' '

# Define the LLM

llm = HuggingFaceHub(repo_id='tiiuae/falcon-7b-instruct', huggingfacehub_api_token=huggingfacehub_api_token)

# Predict the words following the text in question

question = 'Whatever you do, take care of your shoes'

output = llm.predict(question)

print(output)

- OpenAI models in LangChain!

from langchain_openai import OpenAI

# Set your API Key from OpenAI

openai_api_key = "<OPENAI_API_TOKEN>"

# Define the LLM

llm = OpenAI(model_name="gpt-3.5-turbo-instruct", openai_api_key=openai_api_key)

# Predict the words following the text in question

question = 'Whatever you do, take care of your shoes'

output = llm.predict(question)

print(output)

Prompting strategies for chatbots

Let’s use LangChain to start implementing prompting strategies for chatbots. This applies to both OpenAI chat models and open-source chat models from Hugging Face.

Finding the right model

Search the models section of Hugging Face and filter on Question Answering. Then, take note of the model name so it can be referenced in the code.

Many of these models are fine-tuned, meaning they were trained further on domain-specific datasets.

Prompt templates

Prompt templates act as recipes for generating prompts from user inputs in a flexible and modular way.

A template can include instructions, examples (if we want to use few-shot prompting), and any additional context that might help the model complete the task.

Prompt templates are created using LangChain’s PromptTemplate class. We start by creating a template string, which is structured to prompt the AI to answer a question.

from langchain.prompts import PromptTemplate

template = "You are an artificial intelligence assistant, answer the question. {question}"

prompt = PromptTemplate(template = template, input_variables = ["question"])

Here, the question field is defined for dynamic insertion later in the code. To convert this string into a prompt template for our model, we pass it to the PromptTemplate class.
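To make the idea of dynamic insertion concrete, here is a plain-Python sketch of what a prompt template does conceptually. It uses only the standard library and is not LangChain’s implementation; the helper names `input_variables` and `render` are hypothetical.

```python
from string import Formatter


def input_variables(template: str) -> list[str]:
    """List the placeholder names that appear in a template string."""
    return [name for _, name, _, _ in Formatter().parse(template) if name]


def render(template: str, **values: str) -> str:
    """Fill every placeholder, failing loudly if one is missing."""
    missing = [v for v in input_variables(template) if v not in values]
    if missing:
        raise KeyError(f"Missing template variables: {missing}")
    return template.format(**values)


template = "You are an artificial intelligence assistant, answer the question. {question}"
prompt_text = render(template, question="What is LangChain?")
print(prompt_text)
```

The template stays fixed while the question changes from call to call, which is what makes prompts reusable across user inputs.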

Now that we have our prompt template, let’s integrate it into a model.

Integrating PromptTemplate with LLMs: LLMChain

Pass the prompt template and model to the LLMChain class:

from langchain.chains import LLMChain

from langchain_community.llms import HuggingFaceHub

llm = HuggingFaceHub(repo_id = 'tiiuae/falcon-7b-instruct', huggingfacehub_api_token = huggingfacehub_api_token)

llm_chain = LLMChain(prompt = prompt, llm = llm)

# to begin passing user inputs, we call the .run() method on the chain, passing the input string

question = "What is LangChain ?"

print(llm_chain.run(question))

We’ve run a question-answering task end to end using LangChain.
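Conceptually, the chain does two things in sequence: it fills the template with the user input, then sends the result to the model. The sketch below shows that flow in plain Python. `SimpleChain` and `fake_llm` are hypothetical stand-ins (no real model is called), so this illustrates the idea rather than how LLMChain is implemented.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it only reports what it received."""
    return f"[model saw {len(prompt.split())} words]"


class SimpleChain:
    """Format the prompt, call the model, return the text."""

    def __init__(self, template: str, llm: Callable[[str], str]) -> None:
        self.template = template
        self.llm = llm

    def run(self, question: str) -> str:
        prompt = self.template.format(question=question)  # step 1: fill the template
        return self.llm(prompt)                            # step 2: call the model


chain = SimpleChain("Answer the question. {question}", fake_llm)
print(chain.run("What is LangChain?"))
```

Because the model is just a callable here, swapping one model for another does not change the calling code, which mirrors the modularity the notes attribute to LangChain.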

Chat models using LangChain with OpenAI

LangChain also provides classes specifically designed to work with chat models, such as ChatPromptTemplate and ChatOpenAI.

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

llm = ChatOpenAI(temperature = 0, openai_api_key = openai_api_key)

prompt_template = ChatPromptTemplate.from_messages(

[

("system", "You are soto sen master Roshi."),

("human", "Respond to the question : {question}")

])

full_prompt = prompt_template.format_messages(question = "What is the sound of one hand clapping ?")

llm(full_prompt)

The ChatOpenAI class provides more chat-specific functionality than the standard OpenAI class. We can instantiate the model as normal, making sure to provide our OpenAI API key.

To generate a chat prompt template for the model, we can call the .from_messages() method on ChatPromptTemplate.

Inside, we can provide messages for the different OpenAI chat roles, including system, human, and AI.

Finally, inputs can be passed to the template using the .format_messages() method. We then pass the prompt to the model to view its response.
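The role-based structure can be pictured as a list of (role, template) pairs that are each filled in with the same inputs. The following plain-Python sketch shows that idea; the function `format_messages` and the dictionary layout are assumptions made for illustration, not the objects LangChain returns.

```python
def format_messages(message_templates, **values):
    """Fill each (role, template) pair and return role-tagged messages."""
    return [
        {"role": role, "content": template.format(**values)}
        for role, template in message_templates
    ]


message_templates = [
    ("system", "You are a helpful assistant."),
    ("human", "Respond to the question: {question}"),
]
messages = format_messages(
    message_templates, question="What is the sound of one hand clapping?"
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the system message separate from the human message lets the instructions stay constant while only the question varies.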

Managing chat model memory

In-conversation memory enables:

- follow-up questions

- response iteration and expansion

- personalization

Although LangChain allows us to customize and optimize in-conversation chatbot memory, it is still limited by the model’s context window.

An LLM’s context window is the amount of input text a model can consider at once when generating a response, and the length of this window varies between models.
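One consequence of a fixed context window is that older messages eventually have to be dropped. The sketch below keeps only the most recent messages that fit a budget. It assumes, very roughly, one token per whitespace-separated word; real tokenizers count differently, and `fit_to_window` is a hypothetical helper.

```python
def count_tokens(text: str) -> int:
    """Very rough token count: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the window."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "Hi, ask me anything about LangChain",
    "Describe a metaphor for learning LangChain",
    "Summarize that in fewer words",
]
print(fit_to_window(history, max_tokens=12))
```

With a budget of 12 words, the two newest messages fit and the oldest one is dropped.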

LangChain has a standard syntax for optimizing model memory.

We’ll look at three LangChain classes for implementing chatbot memory: ChatMessageHistory, ConversationBufferMemory, and ConversationSummaryMemory.

ChatMessageHistory stores the full history of messages between the user and the model. By providing this history to the model, we can ask follow-up questions and iterate on its responses. Let’s implement this message history with an OpenAI model.

Import the ChatMessageHistory and ChatOpenAI classes, and then define the LLM.

from langchain.memory import ChatMessageHistory

from langchain_openai import ChatOpenAI

chat = ChatOpenAI(temperature = 0, openai_api_key = openai_api_key)

# instantiate ChatMessageHistory and store it as a variable

history = ChatMessageHistory()

# start the conversation with an AI message, which can help set the tone and direction of the conversation

history.add_ai_message("Hi, Ask me anything about Langchain")

history.add_user_message("Describe a metaphor for learning LangChain in one sentence")

# to provide these messages to the model, call the model on the messages attribute of the history object

chat(history.messages)

# When additional user messages are provided, the model bases its response on the full context stored in the conversation history

history.add_user_message("Summarize the preceding sentence in fewer words")

chat(history.messages)

How does chat memory scale with each response?

We can use different tools to manage memory usage in LLM applications, and we can even integrate external data to give the models more context. These tools can change and process information at different speeds as responses are generated by the system, so no single solution fits all cases.

ConversationBufferMemory

This is the first memory optimization tool.

from langchain.memory import ConversationBufferMemory

from langchain_openai import OpenAI

from langchain.chains import ConversationChain

chat = OpenAI(model_name = 'gpt-3.5-turbo-instruct', temperature = 0, openai_api_key = openai_api_key)

# ConversationBufferMemory gives the application a buffer memory containing the messages exchanged so far in the conversation

# Caution: size is not a documented ConversationBufferMemory parameter, so it may have no effect; for a rolling window that discards older exchanges as newer ones are added, LangChain provides ConversationBufferWindowMemory(k=...), where k is the number of exchanges to keep

memory = ConversationBufferMemory(size = 4)

# To integrate the memory type into model, we use a special type of chain for conversations : `ConversationChain`

buffer_chain = ConversationChain(llm = chat, memory=memory, verbose = True)

# Setting verbose equal to True makes the chain print its intermediate steps, such as the formatted prompt, along with the results

# Output :

buffer_chain.predict(input = "Describe a language model in one sentence")

buffer_chain.predict(input = "Describe it again using less words")

buffer_chain.predict(input = "Describe it again fewer words but at least one word")

buffer_chain.predict(input = "What did I first ask you ? I forgot")
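The rolling-buffer idea, where older messages are discarded as newer ones arrive, can be sketched in plain Python with `collections.deque`. `RollingBuffer` is a hypothetical class written for illustration and does not reproduce any LangChain memory class.

```python
from collections import deque


class RollingBuffer:
    """Keep only the most recent `size` messages of a conversation."""

    def __init__(self, size: int) -> None:
        self.messages = deque(maxlen=size)  # oldest items are dropped automatically

    def add(self, role: str, text: str) -> None:
        self.messages.append(f"{role}: {text}")

    def as_context(self) -> str:
        """Render the buffer as the text that would precede the next prompt."""
        return "\n".join(self.messages)


buffer = RollingBuffer(size=4)
for i in range(1, 4):
    buffer.add("Human", f"question {i}")
    buffer.add("AI", f"answer {i}")
print(buffer.as_context())
```

After three exchanges with a buffer of four messages, the first question and answer are gone, which is why a model backed by such a buffer cannot answer "What did I first ask you?" once the window has moved on.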

ConversationSummaryMemory in LangChain

Summarizing the important points of a conversation can also be a good way of optimizing memory.

from langchain.memory import ConversationSummaryMemory

# the chat model can remember key pieces of context without needing to store and process the entire conversation history

chat = OpenAI(model_name = 'gpt-3.5-turbo-instruct', temperature = 0, openai_api_key = openai_api_key)

memory = ConversationSummaryMemory(llm = OpenAI(model_name = 'gpt-3.5-turbo-instruct', openai_api_key = openai_api_key))

summary_chain = ConversationChain(llm=chat, memory = memory, verbose = True)

summary_chain.predict(input = "Please summarize the future in 2 sentences")

summary_chain.predict(input = "Why ?")

summary_chain.predict(input = "What will I need to shape this")
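The mechanism behind a summary memory is a running summary that is rewritten after every exchange, so the stored context stays small. The sketch below shows that update loop in plain Python. `SummaryMemory` is hypothetical, and `keep_last_words` is only a placeholder for the summarization step: it truncates text, whereas a real summary memory would ask an LLM to write the new summary.

```python
from typing import Callable


def keep_last_words(previous_summary: str, new_exchange: str, limit: int = 12) -> str:
    """Placeholder summarizer: keeps the last `limit` words.

    A real summary memory would ask an LLM to rewrite the summary instead.
    """
    words = (previous_summary + " " + new_exchange).split()
    return " ".join(words[-limit:])


class SummaryMemory:
    """Store a running summary rather than the full transcript."""

    def __init__(self, summarize: Callable[[str, str], str]) -> None:
        self.summarize = summarize
        self.summary = ""

    def save_exchange(self, user_text: str, ai_text: str) -> None:
        exchange = f"Human: {user_text} AI: {ai_text}"
        self.summary = self.summarize(self.summary, exchange)


memory = SummaryMemory(keep_last_words)
memory.save_exchange("Please summarize the future in 2 sentences",
                     "It will be shaped by technology.")
memory.save_exchange("Why?", "Because tools change how people work.")
print(memory.summary)
```

However long the conversation gets, the stored context never grows beyond the summarizer’s output, at the cost of losing detail.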

Integrating document loaders

Loading and preparing external data sources for a chat model is the first step of a process called retrieval augmented generation.

Retrieval augmented generation

Pre-trained models do not have access to private or proprietary data sources. The process of integrating these data sources is called retrieval augmented generation (RAG).

This starts with a user query. The query is sent to an application created with a framework such as LangChain, which transforms it into a vector representation. The application then searches a vector database for the most relevant documents by comparing them to the user’s query, and ranks the results using a chosen distance metric. Finally, the most relevant documents from the vector database are concatenated with the user query and sent to the model. The model generates a response, which is sent back to the end user through the application.
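The retrieval flow described above can be sketched end to end in plain Python. This toy version assumes a bag-of-words count vector as the "embedding" and cosine similarity as the ranking metric; real systems use a trained embedding model and a vector database. All function names and the three sample documents are invented for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by similarity to the query and return the best matches."""
    query_vec = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Concatenate the retrieved documents with the user query."""
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"


documents = [
    "the refund policy allows returns within 30 days",
    "our office is closed on public holidays",
    "shipping takes five business days",
]
query = "what is the refund policy"
augmented_prompt = build_prompt(query, retrieve(query, documents))
print(augmented_prompt)
```

The string in `augmented_prompt` is what would be sent to the model, so the model answers with the retrieved document in view.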

RAG development steps are threefold

The first is loading documents into LangChain with document loaders. Next comes splitting the documents into chunks, which are units of information that we can index and process individually. The last step is encoding and storing these chunks for retrieval, which could use a vector database if that meets the needs of the use case. In short: document loading, splitting, then storage and retrieval.
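The splitting step can be illustrated with a small character-based splitter. This is a simplified sketch: the name `split_text` and its parameters are assumptions, and it cuts at fixed character offsets rather than at sentence or paragraph boundaries.

```python
def split_text(text: str, chunk_size: int, chunk_overlap: int = 0) -> list[str]:
    """Cut text into character chunks that share `chunk_overlap` characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks: list[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # the last chunk reached the end
            break
        start += step
    return chunks


chunks = split_text("abcdefghij", chunk_size=4, chunk_overlap=1)
print(chunks)
```

The overlap repeats a little text at each boundary so that a sentence cut in two still appears whole in at least one neighbouring chunk when the overlap is large enough.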

Document loader in LangChain

Loading a PDF document:

from langchain_community.document_loaders import PyPDFLoader

loader = PyPDFLoader("attention_is_all_you_need.pdf")

data = loader.load()

print(data[0])

Loading a CSV file:

from langchain_community.document_loaders.csv_loader import CSVLoader

loader = CSVLoader(file_path = 'fifa_countries_audience.csv')

data = loader.load()

print(data[0])
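To show what a loader produces, here is a plain-Python sketch that turns each CSV row into a small document object with text content and metadata. The `Document` dataclass and `load_csv` function are simplified stand-ins written for this example, and the sample rows are made up. The one-document-per-row layout is an assumption about how CSV loaders typically behave.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class Document:
    """Minimal stand-in for a loaded document: text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def load_csv(csv_text: str, source: str) -> list[Document]:
    """Turn each CSV row into one Document made of 'column: value' lines."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        Document(
            page_content="\n".join(f"{key}: {value}" for key, value in row.items()),
            metadata={"source": source, "row": index},
        )
        for index, row in enumerate(reader)
    ]


sample = "name,score\nalpha,1\nbeta,2\n"  # made-up sample data
docs = load_csv(sample, source="sample.csv")
print(docs[0].page_content)
print(docs[0].metadata)
```

Keeping the source and row number in the metadata makes it possible to trace a retrieved chunk back to where it came from.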

Third-party document loader

from langchain_community.document_loaders import HNLoader

loader = HNLoader("https://news.ycombinator.com")

data = loader.load()

print(data[0])

print(data[0].metadata)